Title: Old data, new forensics: The first second of SN 1987A neutrino emission

Authors: Shirley Weishi Li, John F. Beacom, Luke F. Roberts, Francesco Capozzi

First Author’s Institution: Theoretical Physics Department, Fermi National Accelerator Laboratory, Batavia, IL 60510

Status: Published in Physical Review D [closed access]

Nearly 40 years ago, astronomers witnessed the effects of a star’s core turning to iron. Because fusing iron cannot release energy, fusion in the core stalled and the core could no longer support itself. It collapsed rapidly, with the star’s gas falling in after it, until the core reached nearly the density of an atomic nucleus, tens of trillions of times denser than the Earth. This infalling material then ricocheted outward in a massive explosion we call a core-collapse supernova. This supernova, dubbed SN 1987A, was not the first supernova we’ve observed, and it certainly is not the most recent, but it is the first and only supernova from which we’ve ever detected neutrinos directly.
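The density comparison can be checked to order of magnitude. The short sketch below multiplies an approximate nuclear saturation density (about 0.16 nucleons per cubic femtometre, an assumed textbook value) by the nucleon mass and divides by Earth’s mean density (about 5.5 g/cm³). The result is a few times 10^13, i.e. tens of trillions. A collapsing core can briefly exceed this density, so treat the number as a ballpark only.

```python
# Approximate inputs (assumed textbook values, for an order-of-magnitude check)
NUCLEON_MASS_G = 1.6726e-24    # mass of a proton in grams (a neutron is ~0.1% heavier)
SATURATION_DENSITY_FM3 = 0.16  # nucleons per cubic femtometre in nuclear matter
FM3_TO_CM3 = 1e-39             # 1 fm^3 = (1e-13 cm)^3
EARTH_MEAN_DENSITY = 5.51      # Earth's mean density in g/cm^3

# Mass density of nuclear matter in g/cm^3
nuclear_density = SATURATION_DENSITY_FM3 * NUCLEON_MASS_G / FM3_TO_CM3
# How many times denser than the Earth
ratio = nuclear_density / EARTH_MEAN_DENSITY

print(f"nuclear density ~ {nuclear_density:.2e} g/cm^3")
print(f"ratio to Earth's mean density ~ {ratio:.1e}")
```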

Only a handful of neutrinos were detected: about 20 in all across three separate detectors. One reason we were able to detect the neutrino burst at all is that it happened so nearby, a mere 50,000 parsecs (pc) away, while most other supernovae we’ve detected lie well beyond 1,000,000 pc. Despite the small number of neutrinos recorded, the event was crucial to validating our understanding of supernovae. It confirmed that neutrinos escape from a supernova well before light does, because neutrinos interact with matter far less often than photons do. The number of neutrinos detected and the distribution of their energies were also consistent with theoretical estimates (within an order of magnitude).
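Why distance matters so much can be shown with the inverse-square law. The neutrino flux from a supernova falls off as 1/distance², so the expected number of detections scales the same way. The minimal sketch below assumes the same supernova and the same detectors, with only the distance changed. The function name is hypothetical, and "about 20 events at 50,000 pc" is taken as a round reference value from the text above.

```python
def scale_event_count(events_ref, distance_ref_pc, distance_new_pc):
    """Rescale an expected event count to a new distance, assuming an
    identical supernova and detectors and a flux falling off as 1/distance^2."""
    return events_ref * (distance_ref_pc / distance_new_pc) ** 2

# About 20 events at ~50,000 pc (SN 1987A), moved out to 1,000,000 pc
far = scale_event_count(20, 50_000, 1_000_000)
print(f"expected events at 1 Mpc: {far:.2f}")  # prints: expected events at 1 Mpc: 0.05
```

Moving the same explosion 20 times farther away cuts the signal by a factor of 400. A supernova at a million parsecs would most likely have produced no detected neutrinos at all in these detectors.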

The question, then, is: are we ready to model and understand the neutrino flux the next time a nearby star goes supernova? The authors of today’s paper address this question by comparing many existing supernova models with each other, as well as with the actual neutrino data from SN 1987A.

The Work

To start, the authors compared the results from four 3-dimensional, five 2-dimensional, and one 1-dimensional supernova simulations developed by various teams over the past decade. Besides the number of spatial dimensions each code used, the simulations also differed in time resolution (how short a time interval they can resolve), mass resolution (how finely they divide up the star’s material), simulation duration, and the level of physical detail they include. Although each code operates differently, they all shared at least one common parameter: the starting mass of the progenitor star was set to 20 times the mass of the Sun, a value that has been used to model SN 1987A ever since the supernova was first observed.

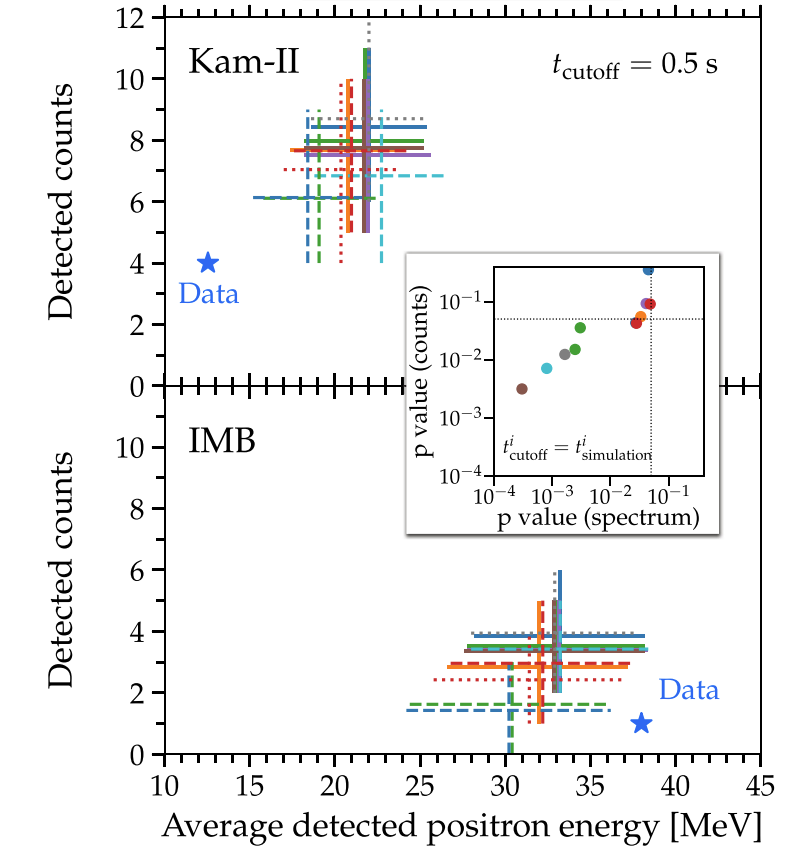

For this work, the simulation outputs the authors care about are the number of neutrinos produced and the spectrum of their energies, because these are the quantities that neutrino detectors on Earth can actually measure. Once they have these from each simulation, they estimate how many of the neutrinos would actually have been detected. To do this, they model the sensitivity and energy range of the Kamiokande-II (Kam-II) and Irvine-Michigan-Brookhaven (IMB) detectors, two of the three detectors that recorded the 1987A event.
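The idea of turning a simulated spectrum into a predicted detection count can be illustrated as follows. Spread the emitted neutrinos over a sphere at the supernova’s distance, multiply by the interaction cross section and the number of target protons in the detector, keep only events above a detection threshold, and integrate over energy. The sketch below is a minimal illustration under stated assumptions, not the authors’ detector model. It assumes a "pinched" (alpha-fit) spectral shape, the leading-order inverse beta decay cross section for electron antineutrinos on free protons, and a step-function efficiency at a threshold in positron energy. All function names, the 5e52 erg / 15 MeV / alpha = 3 inputs, and the detector sizes and thresholds are rough, hypothetical values chosen for illustration.

```python
import math

# Approximate physical constants and conversions
MEV_TO_ERG = 1.602176634e-6   # erg per MeV
KPC_TO_CM = 3.0857e21         # cm per kiloparsec
DELTA_NP = 1.293              # neutron-proton mass difference (MeV)
M_E = 0.511                   # electron (positron) mass (MeV)
# Free protons (hydrogen nuclei) per kiloton of water: 2 per H2O molecule
FREE_PROTONS_PER_KTON = 2 * 1e9 / 18.015 * 6.02214076e23


def alpha_fit_spectrum(e, total_number, mean_energy, alpha):
    """Antineutrinos per MeV at energy e (MeV) for an assumed 'alpha-fit' shape,
    normalized so that its integral over all energies equals total_number."""
    a1 = alpha + 1.0
    norm = total_number * a1**a1 / (math.gamma(a1) * mean_energy)
    x = e / mean_energy
    return norm * x**alpha * math.exp(-a1 * x)


def ibd_cross_section(e_nu):
    """Leading-order inverse beta decay cross section (cm^2) for an electron
    antineutrino of energy e_nu (MeV): sigma ~ 9.52e-44 * E_e * p_e."""
    e_pos = e_nu - DELTA_NP        # positron total energy, nucleon recoil neglected
    if e_pos <= M_E:
        return 0.0                 # below the reaction threshold
    p_pos = math.sqrt(e_pos**2 - M_E**2)
    return 9.52e-44 * e_pos * p_pos


def expected_events(total_energy_erg, mean_energy, alpha, distance_kpc,
                    fiducial_kton, threshold_mev, efficiency=1.0,
                    e_max=100.0, n_steps=5000):
    """Fold the spectrum with the cross section and a step-function detector
    response, integrating over energy with the trapezoidal rule."""
    total_number = total_energy_erg / (mean_energy * MEV_TO_ERG)
    area = 4.0 * math.pi * (distance_kpc * KPC_TO_CM) ** 2   # sphere at Earth's distance
    n_protons = fiducial_kton * FREE_PROTONS_PER_KTON
    de = e_max / n_steps
    integral = 0.0
    for i in range(n_steps + 1):
        e = i * de
        # Crude detector response: count the event only if the positron is above threshold
        seen = efficiency if (e - DELTA_NP) >= threshold_mev else 0.0
        f = alpha_fit_spectrum(e, total_number, mean_energy, alpha)
        weight = 0.5 if i in (0, n_steps) else 1.0             # trapezoid end weights
        integral += weight * f * ibd_cross_section(e) * seen
    return integral * de * n_protons / area


# Illustrative inputs only: 5e52 erg in electron antineutrinos, <E> = 15 MeV,
# alpha = 3, at 50 kpc, with rough, hypothetical detector sizes and thresholds.
kam_like = expected_events(5e52, 15.0, 3.0, 50.0, fiducial_kton=2.14, threshold_mev=7.5)
imb_like = expected_events(5e52, 15.0, 3.0, 50.0, fiducial_kton=6.8, threshold_mev=20.0)
print(f"Kam-II-like: {kam_like:.1f} events, IMB-like: {imb_like:.1f} events")
```

Real detector responses are not sharp steps, and the paper’s treatment of Kam-II and IMB is more detailed. The point of the sketch is the structure of the calculation: spectrum times cross section times targets times efficiency, divided by the area of a sphere at the source’s distance.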

The results of the various models are shown in figure 1. Impressively, all of the models broadly agree with each other! This is remarkable considering the range of complexity, parameters, and modeling methods across the ten different simulation codes the authors tested. However, model-to-model consistency is where the fun ends. When the authors compare the model results to the data, they find that none of the models reproduces the actual neutrino measurements at a statistically acceptable level. More specifically, the models overestimate both the number of neutrinos and their energies compared with the neutrinos detected by Kam-II.
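One generic way to make "too many predicted neutrinos" concrete is a Poisson tail probability. If a model predicts mu events on average, how likely is it that a detector records k or fewer? The sketch below is not the authors’ statistical analysis, which compares energies as well as counts. The function name and the numbers (11 observed, 20 predicted) are hypothetical, chosen only to show the calculation.

```python
import math


def poisson_cdf(k, mu):
    """P(N <= k) for a Poisson-distributed count N with mean mu."""
    term = math.exp(-mu)      # P(N = 0)
    total = term
    for n in range(1, k + 1):
        term *= mu / n        # P(N = n) obtained from P(N = n - 1)
        total += term
    return total


# Hypothetical numbers, for illustration only
observed = 11
predicted = 20.0
p_value = poisson_cdf(observed, predicted)
print(f"P(N <= {observed} | mu = {predicted}) = {p_value:.3f}")
```

A small tail probability means the observed count would be unusually low if the model were right. A single such number is only a rough indicator, though. With about a dozen events per detector, comparing the energy distribution carries much of the information.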

What Gives?

When a model doesn’t reproduce observations, it generally means that either (a) you’re missing a key piece of physics or (b) one (or more) of your starting assumptions was wrong. One key detail left out of the initial comparison is that neutrinos are fickle. They’re born as one of three “flavors” (electron, muon, or tau), set by the reaction that produces them. As they travel, however, they can switch flavors in a process called neutrino oscillation, and the dense matter inside a supernova can strongly affect how this happens. Kam-II and IMB mainly detected electron antineutrinos, so if the neutrinos change flavor on their way from the supernova to the detector, the number of neutrinos you expect to detect changes.

To address this, the authors redo their predictions, this time allowing electron-flavored neutrinos to oscillate into the other flavors. This lowered the predicted number of detectable neutrinos but raised their expected energies, so the models still disagree with the data.
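A simple two-component picture shows how flavor conversion can lower the count while raising the energy. In this picture, the electron antineutrinos arriving at Earth are a fraction p of those emitted as electron antineutrinos, plus a fraction 1 - p of those emitted as muon or tau antineutrinos (lumped together as "nubar_x" here). This is an illustrative simplification, not the authors’ treatment. The survival probability p depends on, among other things, the neutrino mass ordering. The class name, function name, and source numbers are hypothetical, chosen only so that nubar_x are fewer but more energetic, as the result described above implies.

```python
from dataclasses import dataclass


@dataclass
class FluxSummary:
    number: float        # number of antineutrinos (arbitrary units)
    mean_energy: float   # average energy in MeV


def mix_flavors(nubar_e, nubar_x, survival_prob):
    """Electron-antineutrino flux after flavor conversion: a fraction
    survival_prob of the original nubar_e plus (1 - survival_prob) of nubar_x."""
    p = survival_prob
    number = p * nubar_e.number + (1 - p) * nubar_x.number
    total_energy = (p * nubar_e.number * nubar_e.mean_energy
                    + (1 - p) * nubar_x.number * nubar_x.mean_energy)
    return FluxSummary(number, total_energy / number)


# Hypothetical source values: nubar_x are fewer but more energetic
source_e = FluxSummary(number=1.0, mean_energy=15.0)
source_x = FluxSummary(number=0.8, mean_energy=17.0)
for p in (1.0, 0.7, 0.0):
    arriving = mix_flavors(source_e, source_x, p)
    print(f"p = {p:.1f}: number = {arriving.number:.2f}, <E> = {arriving.mean_energy:.1f} MeV")
```

As p drops below 1, the arriving electron antineutrinos become fewer and more energetic, which is the direction of the shift described in the text.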

Okay, neutrino oscillations didn’t fix the problem. Maybe one of the starting assumptions was wrong, then. The authors had originally assumed that the progenitor star was 20 solar masses, but what if that wasn’t right? To test whether the progenitor mass might be responsible for the disagreement between models and data, they rerun the simulations with progenitor masses between 15 and 27 solar masses. However, the simulations still predicted too many neutrinos, with energies that were too high, to agree with the data. So what’s missing?

The short answer is: we don’t know! When it comes to an event like a supernova, there is a daunting number of parameters that can be varied. The environment is also both extreme and hidden from view. We can’t directly observe the physics happening inside, which makes it incredibly difficult to know which physical processes are at play. Perhaps a process we haven’t even conceived of is responsible for the discrepancy! Perhaps two stars were involved instead of one! Maybe our picture of neutrino production is wrong!

As we wait for the next nearby supernova, the authors and others will be hard at work honing models of neutrino production, propagation, and detection. Hopefully, by the time it happens, we’ll have the theory ready to explain the observations!

Featured image credit: adapted from NASA Goddard Space Flight Center

Astrobite edited by Kat Lee