- Title: Towards Building a Crowd-Sourced Sky Map

- Authors: Dustin Lang, David W. Hogg, Bernhard Schölkopf

- First Author’s Institution: Carnegie Mellon University

- Status: Presented at 17th Annual Conference on Artificial Intelligence and Statistics

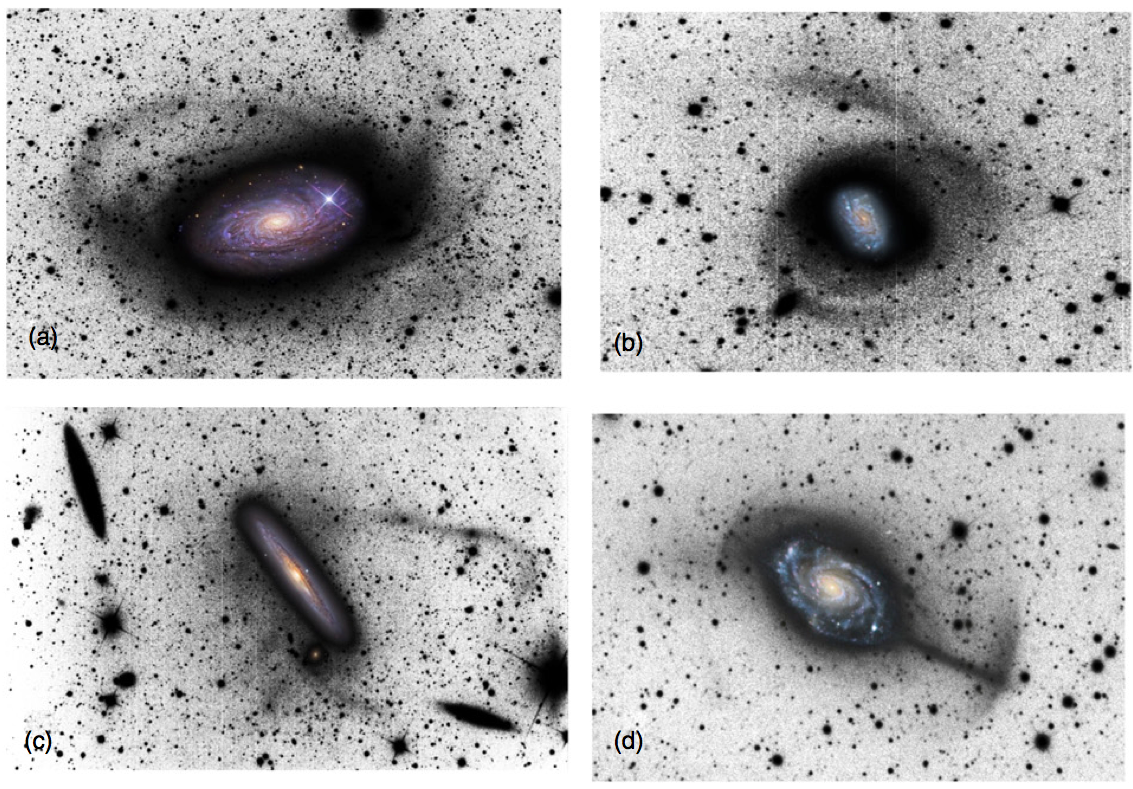

Figure 1. Long-exposure images of faint tidal streams around massive galaxies. These are remnants of previous mergers. The catalog numbers are (a) NGC 5055, (b) NGC 1084, (c) NGC 4216, (d) NGC 4651. The colored inset images have been added for reference. These images are taken from the study by Martinez-Delgado et al.

I live in Northern Idaho, where it is very sparsely populated, a great place for star viewing. Recently I was so struck by the bright swath of the Milky Way that I took my fiancée’s camera and snapped a great picture of…nothing: it was too dim. I leaned over and showed her, and she did a little magic with a dial and a button and we took another. This time we set the camera on a rock, and the shutter stayed open for several minutes. The result was a clear field of stars, but still no lovely swath of galaxy; that would have required an even longer exposure.

Professional astronomers battle the same problem. In most cases, they’re trying to record much fainter sources of light than we were. Deep images of distant galaxies usually take several hours of exposure time. That’s hard to get, but the science is worth it. Take as an example recent observations by Martinez-Delgado and colleagues, which revealed previously unknown tidal streams around normal-looking massive galaxies (Fig. 1). These images strengthen the case that all large spiral galaxies have had a history of mergers.

Today’s authors present a way to get deep images without telescope time. Their method involves a clever compilation of sky images from the Web. The algorithm, called Enhance, synthesizes a collection of (usually) short-exposure images gleaned from the Web to produce a deep image.

This is much harder than it sounds. Images have different noise backgrounds. Some have text on them. Some have saturated regions (pixel brightness is maxed out). They might be false-color, representing X-rays or radio light. Worst of all, most digital images have had nonlinear filters applied (like gamma correction), so that the luminosity of each patch of sky cannot be recovered directly from pixel values.

But Enhance circumvents all these problems by focusing on the pixels themselves, bringing out subtle contrasts in both bright areas and dark areas. Enhance allows a large pool of images to ‘vote’ for the rankings of each pixel’s brightness, whether or not that pixel is representing optical or infrared or some other wavelength of light. Color images are broken into red, green, and blue components and treated individually. The consensus image is built from the pixel ranking that most images ‘agree’ upon.
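To make the 'voting' idea concrete, here is a minimal sketch in Python. It is not the authors' actual procedure: I am assuming a simple mean-rank (Borda-style) vote as a stand-in, and the function names `normalized_ranks` and `consensus_by_mean_rank` are made up for illustration. The point it shows is that any monotonic brightness filter, such as gamma correction, leaves the ranking of pixels untouched, so differently processed images of the same sky still cast the same votes.

```python
import numpy as np

def normalized_ranks(image):
    """Replace each pixel by its brightness rank, scaled to [0, 1].

    Ties are broken by pixel position (a simplification of this sketch).
    """
    flat = np.asarray(image, dtype=float).ravel()
    order = np.argsort(flat, kind="stable")
    ranks = np.empty(flat.size, dtype=float)
    ranks[order] = np.arange(flat.size)
    return (ranks / max(flat.size - 1, 1)).reshape(np.shape(image))

def consensus_by_mean_rank(images):
    """Hypothetical stand-in for the vote: average the per-image ranks."""
    return np.mean([normalized_ranks(img) for img in images], axis=0)

# A toy 'true sky' with 25 distinct brightness levels in [0, 1].
rng = np.random.default_rng(0)
truth = rng.permutation(25).reshape(5, 5) / 24.0

# Three 'Web images': the same sky after different monotonic filters.
images = [truth ** 0.45, truth ** 2.0, np.log1p(10.0 * truth)]

consensus = consensus_by_mean_rank(images)
# Every image ranks the pixels the same way, so the consensus
# reproduces the ranking of the true sky.
print(np.allclose(consensus, normalized_ranks(truth)))
```

Real Web images also carry noise, text, and saturated regions, which is where a vote over hundreds of images earns its keep; this toy example only covers the nonlinear-filter problem.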

As a demonstration of their method, they gathered images of NGC 5907 from the Interweb, literally searching for “NGC 5907” and “NGC5907” on Flickr, Bing, and Google. Then they used automated astrometry software to register each image to its celestial coordinates, discarding those that failed registration and those that showed a different patch of sky. They resampled the remaining 298 unique images onto a rectangular grid centered on the galaxy, and finally histogram-matched them. An image histogram displays the distribution of brightness values across the image. So this last step sloshed pixels up and down in brightness until there was the same amount of light and dark in each image; but, note, each pixel preserved its original rank in order of brightness. The resulting images were fed to their voting algorithm.
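Histogram matching is easier to see in code than in words. The sketch below is my own illustration, not the authors' implementation: it assumes both images have already been resampled onto the same grid, it matches against an arbitrary reference image (the target histogram used in the paper is not described here), and `histogram_match` is a hypothetical name. It hands the sorted brightness values of the reference to the source pixels in rank order, so the source ends up with the reference's histogram while every pixel keeps its place in the brightness ordering.

```python
import numpy as np

def histogram_match(source, reference):
    """Give `source` the brightness histogram of `reference`.

    The k-th faintest pixel of `source` receives the k-th faintest
    value of `reference`, so pixel ranks in `source` are preserved.
    Assumes both images have the same shape.
    """
    src = np.asarray(source, dtype=float)
    ref = np.asarray(reference, dtype=float)
    if src.shape != ref.shape:
        raise ValueError("source and reference must have the same shape")
    order = np.argsort(src.ravel(), kind="stable")  # faintest to brightest
    matched = np.empty(src.size, dtype=float)
    matched[order] = np.sort(ref.ravel())
    return matched.reshape(src.shape)

rng = np.random.default_rng(1)
reference = rng.normal(100.0, 10.0, size=(8, 8))   # arbitrary target histogram
source = rng.random((8, 8)) ** (1 / 2.2)           # a gamma-corrected toy image

matched = histogram_match(source, reference)

# Same amount of light and dark as the reference...
print(np.array_equal(np.sort(matched.ravel()), np.sort(reference.ravel())))
# ...but each pixel keeps its original rank in order of brightness.
print(np.array_equal(np.argsort(source.ravel(), kind="stable"),
                     np.argsort(matched.ravel(), kind="stable")))
```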

Lang and coauthors chose this particular galaxy for their demonstration because it is known to host faint tidal streams, though these were discovered only recently. (Actually the discovery was made by Martinez-Delgado and colleagues from above.) To pour water on the altar, they manually removed any reproductions of the recent long-exposure images by Martinez-Delgado et al. from their input pool. As you can see in Fig. 2, Enhance readily uncovered the tidal streams from the 298 input images, in which they are invisible or only faintly visible!

Figure 2. Images combined into a consensus image by the Enhance algorithm. Upper left: the 11-hour exposure image from Martinez-Delgado et al. Upper right: 8 representative images out of the 298 harvested from the Web. Bottom: 3 consensus images generated by running different permutations of the images through the Enhance algorithm. Notice how robust the algorithm is against different orderings. Also notice how the consensus image shows the faint stellar stream almost as well as the long-exposure image.

There are some interesting technical details to the Enhance algorithm. For instance, as I mentioned, the images must be registered to their celestial coordinates. This is done by an automated web service called Astrometry.net. It was developed by two of the authors. You can use it too! In exchange for uploading an image to their server, you receive a version annotated with all the interesting objects in your field of view.

I’d still rather step outside to look at the Milky Way. But it’s exciting to discover that Enhance can build scientifically interesting images from all the bits lying around on the Web.