Title: No νs is good news

Authors: Nathaniel Craig, Daniel Green, Joel Meyers and Surjeet Rajendran

First author institution: Department of Physics, University of California, Santa Barbara

Status: Available on arXiv

Large Scale Structure (LSS) cosmology is the study of the distribution of matter and the growth of structure in the Universe, which we can observe to measure cosmological parameters like the density of matter or to test theories of gravity. Observations of the LSS contain new information about neutrinos, whose behaviour in the early Universe impacted the growth of the structures we see today.

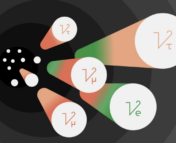

Neutrinos are fundamental particles in the Standard Model that are still not well understood. They were initially believed to be massless. However, the discovery of neutrino oscillations in 1998 showed that they must have non-zero masses. In oscillations, neutrinos switch between the three known flavours: electron, muon and tau neutrinos. Oscillations can only occur if the neutrino species differ in mass. Oscillation experiments measure the differences between the masses, but not the absolute mass of each type. Setting the lightest mass to zero therefore gives the lowest possible value for the sum of the three masses, which is 0.058 eV.
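To see where this lower limit comes from, here is a minimal sketch. It assumes rounded values for the two measured squared-mass splittings: Δm²₂₁ ≈ 7.4×10⁻⁵ eV² and Δm²₃₁ ≈ 2.5×10⁻³ eV². These numbers are illustrative assumptions, not values from the paper. The sketch also assumes the "normal" mass ordering, which gives the smallest possible sum. The hypothetical function `mass_sum_normal` builds the other two masses from the lightest one.

```python
import math

# Rounded oscillation mass splittings in eV^2 (assumed for illustration;
# a current global fit gives the precise values).
DM2_21 = 7.4e-5  # "solar" splitting: m2^2 - m1^2
DM2_31 = 2.5e-3  # "atmospheric" splitting: m3^2 - m1^2 (normal ordering)


def mass_sum_normal(m_lightest: float = 0.0) -> float:
    """Sum of the three neutrino masses (eV) for a given lightest mass m1.

    Oscillations fix only the squared-mass differences, so the heavier
    masses follow from m1; the sum is smallest when m1 = 0.
    """
    m1 = m_lightest
    m2 = math.sqrt(m1 ** 2 + DM2_21)
    m3 = math.sqrt(m1 ** 2 + DM2_31)
    return m1 + m2 + m3


for m_light in (0.0, 0.01, 0.05):
    print(f"lightest mass {m_light:.2f} eV -> sum {mass_sum_normal(m_light):.4f} eV")
```

With the lightest mass at zero the sum comes out at about 0.059 eV, consistent with the ~0.058 eV quoted above. The exact figure depends on the splittings adopted. Any non-zero lightest mass only raises the sum.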

A problem with neutrino masses?

In the early Universe, neutrinos behaved like radiation, carrying energy as they streamed freely through space. This suppressed the growth of structure on small length scales. Later, the neutrinos slowed down and began to cluster with matter. This change from radiation-like to matter-like behaviour also affected the Universe's expansion, because matter and radiation influence the expansion rate differently.
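The size of this effect can be estimated with standard linear-theory rules of thumb, which are not taken from the paper:

- The relic neutrino density is Ω_ν h² ≈ Σm_ν / 93.14 eV.
- The small-scale suppression of the matter power spectrum is roughly ΔP/P ≈ −8 f_ν, where f_ν = Ω_ν / Ω_m. This holds only approximately, for small f_ν.
- A neutrino of mass m becomes non-relativistic around 1 + z ≈ 1890 (m / 1 eV).

The sketch below applies these formulas, assuming a Planck-like total matter density Ω_m h² = 0.143.

```python
# Standard linear-theory approximations (assumed here, not taken from the paper):
#   Omega_nu h^2 = sum_m_nu / 93.14 eV            relic neutrino density today
#   dP/P ~ -8 f_nu, f_nu = Omega_nu / Omega_m     small-scale power suppression
#   1 + z_nr ~ 1890 (m_nu / 1 eV)                 onset of non-relativistic behaviour

OMEGA_M_H2 = 0.143  # assumed Planck-like total matter density, Omega_m h^2


def neutrino_fraction(sum_m_nu_ev: float, omega_m_h2: float = OMEGA_M_H2) -> float:
    """Fraction of the matter density carried by massive neutrinos."""
    return (sum_m_nu_ev / 93.14) / omega_m_h2


def small_scale_suppression(sum_m_nu_ev: float, omega_m_h2: float = OMEGA_M_H2) -> float:
    """Approximate fractional drop in the matter power spectrum on small scales."""
    return -8.0 * neutrino_fraction(sum_m_nu_ev, omega_m_h2)


def nonrelativistic_redshift(m_nu_ev: float) -> float:
    """Rough redshift at which one neutrino of mass m_nu stops behaving like radiation."""
    return 1890.0 * m_nu_ev - 1.0


for total in (0.06, 0.12):
    f_nu = neutrino_fraction(total)
    print(f"sum m_nu = {total:.2f} eV: f_nu = {f_nu:.4f}, "
          f"dP/P ~ {small_scale_suppression(total):.1%}")
print(f"a 0.05 eV neutrino turns non-relativistic near z ~ {nonrelativistic_redshift(0.05):.0f}")
```

Even the minimum mass sum suppresses small-scale power by a few per cent in this approximation. That is why precise LSS data are sensitive to it.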

Because of these effects, LSS data can constrain the sum of neutrino masses, $\sum m_\nu$. Until recently, the best constraints came from the Planck measurements of the Cosmic Microwave Background (CMB), with additional constraining power from measurements of Baryon Acoustic Oscillations (BAOs). These measurements indicated, with a high level of confidence, that $\sum m_\nu < 0.12$ eV.

However, new BAO measurements from the Dark Energy Spectroscopic Instrument (DESI), combined with the Planck data, have constrained the mass sum to $\sum m_\nu < 0.072$ eV. They also tend to prefer $\sum m_\nu = 0$ eV, even though we expect neutrinos to have a non-zero mass! This could point to a gap in our theoretical understanding of how neutrinos affect the LSS.

The authors of today's paper consider several ways in which this too-small result could arise. We might need new physics that allows neutrinos to behave differently, which would change the neutrino mass signal we expect to see. Cosmological constraints are model dependent. If our modelling neglects key physics that alters the behaviour of neutrinos, we could obtain biased constraints. Alternatively, the models may be fine, but our measurements of other cosmological parameters may have problems. Some of those parameters can produce an effect almost opposite to that of massive neutrinos.

Modelling negative neutrino masses

In the modelling behind the constraints reported by DESI + Planck, the parameter representing $\sum m_\nu$ is not allowed to go below zero. This makes sense: how can neutrinos have negative mass? However, it is possible to construct an effective parameter that mimics the neutrino mass signal but can take negative values. This lets the analysis explore what the data prefer, even if the result is unphysical in the context of the model. The authors modify a code used to calculate observables of the Large Scale Structure to include such a parameter. They find that the Planck + DESI data prefer a negative value of this effective neutrino mass, around -0.16 eV, as seen in Figure 1.
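Why does a positive-only parameter end up "preferring" zero? A toy one-parameter likelihood makes this concrete. The sketch below is not the authors' method or code. It assumes a Gaussian likelihood peaked at the quoted -0.16 eV, with a hypothetical width `SIGMA` chosen purely for illustration. It then compares the posterior peak under a flat prior that stops at zero with one that extends below zero.

```python
import math

# Hypothetical toy likelihood for an effective neutrino-mass parameter (eV).
# PEAK echoes the -0.16 eV preference quoted in the text; SIGMA is an
# arbitrary width chosen only for illustration.
PEAK = -0.16
SIGMA = 0.10


def likelihood(m: float) -> float:
    """Unnormalised Gaussian likelihood in the effective mass parameter."""
    return math.exp(-0.5 * ((m - PEAK) / SIGMA) ** 2)


def posterior_mode(m_min: float, m_max: float = 1.0, n: int = 100_000) -> float:
    """Peak of the posterior for a flat prior on [m_min, m_max], found on a grid."""
    step = (m_max - m_min) / n
    grid = [m_min + i * step for i in range(n + 1)]
    return max(grid, key=likelihood)


def fraction_below_zero() -> float:
    """Share of the untruncated likelihood that lies at negative masses."""
    return 0.5 * (1.0 + math.erf((0.0 - PEAK) / (SIGMA * math.sqrt(2.0))))


physical = posterior_mode(m_min=0.0)   # standard analysis: mass sum >= 0
extended = posterior_mode(m_min=-1.0)  # effective parameter allowed below zero
print(f"posterior peak with m >= 0 prior: {physical:.3f} eV")   # 0.000 eV
print(f"posterior peak with extended prior: {extended:.3f} eV") # -0.160 eV
print(f"likelihood fraction at m < 0: {fraction_below_zero():.2f}")
```

With the physical prior, the posterior simply piles up against the boundary at zero, which hides where the data actually point. Allowing the effective parameter to go negative recovers the location of the peak.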

Explanations within the standard model?

The authors first discuss possibilities that would not require new physics. When we constrain $\sum m_\nu$, we have to account for how well we know other parameters that may be correlated with it. These parameters must be constrained alongside $\sum m_\nu$ in the same analysis. They include the optical depth to reionization, which represents the probability of CMB photons being scattered by free electrons after reionization, and the matter density, $\Omega_m$. If we are underestimating the true values of these parameters, we could obtain artificially low values for the neutrino mass. These possibilities are not necessarily likely. The authors note that in recent years, measurements of the optical depth have come out slightly lower than historical measurements, while measurements of the matter density have been fairly stable. Measuring the CMB is difficult because of foreground contamination, so systematic errors in CMB measurements of the optical depth would be hard to detect. Finally, the bounds on neutrino mass are only this constraining within the standard cosmological model, $\Lambda$CDM. They become much weaker when the BAO analysis allows more freedom in the behaviour of Dark Energy.
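The effect of a correlated parameter can be illustrated with a two-dimensional Gaussian posterior. For such a posterior, the preferred mass sum at fixed optical depth τ follows the conditional mean:

E[m | τ] = μ_m + ρ (σ_m / σ_τ)(τ − μ_τ)

The sketch below assumes a positive correlation ρ between τ and the mass sum, as the argument above implies. All widths and the correlation value are hypothetical numbers for illustration, not the paper's values. The same logic would apply to the matter density.

```python
# Hypothetical numbers chosen only to illustrate the argument; they are NOT
# the paper's values or real posterior widths.
SIGMA_M = 0.05     # eV, assumed uncertainty on the neutrino mass sum
SIGMA_TAU = 0.007  # assumed uncertainty on the optical depth to reionization
RHO = 0.5          # assumed positive correlation between the two


def conditional_mass_shift(delta_tau: float, rho: float = RHO) -> float:
    """Shift in the preferred mass sum (eV) when the optical depth moves by delta_tau.

    For a bivariate Gaussian posterior the conditional mean obeys
    E[m | tau] = mu_m + rho * (sigma_m / sigma_tau) * (tau - mu_tau).
    """
    return rho * (SIGMA_M / SIGMA_TAU) * delta_tau


for dtau in (-0.01, 0.0, 0.01):
    print(f"delta tau = {dtau:+.3f} -> shift in mass sum = "
          f"{conditional_mass_shift(dtau):+.3f} eV")
```

With a positive correlation, an optical depth that is truly higher than the value the analysis settles on implies a higher mass sum. Underestimating τ therefore drags the inferred neutrino mass down.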

Beyond the Standard Model

When we look for solutions Beyond the Standard Model (BSM), we allow for the possibility that the neutrino mass signal is biased because our models of neutrino behaviour are incomplete. The authors discuss various ways this might happen, some of which are summarised here.

- Decays – Neutrinos might decay into some other kind of particle, a process not predicted by the Standard Model. Their lifetime must be long enough that they still stream freely in the early Universe, since we see evidence for this in observational data. It must also be short enough that they decay before they can imprint the signal of neutrino mass on the growth of structure. To satisfy current observations, this scenario places limits on the possible neutrino masses. It also limits how strongly neutrinos can interact with the particles they decay into.

- Annihilation – In this case, neutrinos annihilate with each other into some other kind of particle. For reasons similar to those limiting decays, the efficiency of this reaction must satisfy certain requirements. For example, the neutrinos need to annihilate into particles whose interactions have particular mathematical properties, and there are limits on the possible interaction strengths.

- Heating and cooling – If neutrinos had a very different temperature over time, being much hotter or colder would change how they behave. Their current temperature is estimated to be 1.95 K. If they were colder by a factor of 10–1000, they would stream freely over much shorter length scales than we expect, reducing the neutrino mass signal we see. Observational constraints require this cooling to happen in the later Universe. It could occur through interactions with dark matter, which under certain circumstances could act as a heat sink. If the neutrinos were instead hotter, by a factor of about 100, they would never slow down enough to cluster with matter. They would keep streaming freely like radiation, so again there would be no massive neutrino signal. This could happen if some process transferred energy to the neutrinos; dark matter is again a candidate.

- Dark matter with a long-range force – Suppose there were an additional attractive force acting only between dark matter particles. We would expect this to have observable consequences for gravitational dynamics, and therefore for the clustering of matter in space. If such a force increased the clustering of matter on the relevant scales, it could counteract the suppression caused by massive neutrinos and change the signal we expect to see.
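The heating and cooling scenarios come down to how fast the relic neutrinos move. The sketch below estimates their mean speed from the standard result that relativistically decoupled fermions have a mean momentum ⟨p⟩c ≈ 3.15 k_B T. It uses the 1.95 K temperature quoted above. Two further choices are assumptions for illustration: the hypothetical `temp_factor` knob, which rescales that temperature, and the representative neutrino mass of 0.05 eV. Roughly, the free-streaming scale grows with this speed. So a colder population stops streaming on shorter length scales, and a much hotter one stays close to relativistic.

```python
import math

K_B_EV_PER_K = 8.617333262e-5  # Boltzmann constant (eV/K)
C_KM_S = 299_792.458           # speed of light (km/s)
T_NU_TODAY_K = 1.95            # standard relic-neutrino temperature today (K)


def mean_momentum_ev(z: float = 0.0, temp_factor: float = 1.0) -> float:
    """Mean momentum <p>c ~ 3.15 k_B T in eV for relativistically decoupled fermions.

    temp_factor is a hypothetical knob rescaling the standard temperature:
    < 1 for 'cooled' neutrinos, > 1 for 'heated' ones.
    """
    return 3.15 * K_B_EV_PER_K * T_NU_TODAY_K * temp_factor * (1.0 + z)


def thermal_velocity_km_s(mass_ev: float, z: float = 0.0, temp_factor: float = 1.0) -> float:
    """Speed v = c p / sqrt(p^2 + m^2) of a neutrino with the mean momentum."""
    p = mean_momentum_ev(z, temp_factor)
    return C_KM_S * p / math.sqrt(p ** 2 + mass_ev ** 2)


MASS_EV = 0.05  # assumed representative neutrino mass for illustration
for label, factor in (("standard", 1.0), ("10x colder", 0.1), ("100x hotter", 100.0)):
    v = thermal_velocity_km_s(MASS_EV, temp_factor=factor)
    print(f"{label:>12}: v ~ {v:,.0f} km/s ({v / C_KM_S:.3f} c)")
```

Using the full relativistic expression keeps the speed below c even for the heated case. In that case the mean momentum is comparable to the mass, so the neutrinos are still semi-relativistic today.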

Summary

Overall, the authors present a number of interesting ways to explain why combining Planck CMB data with DESI data yields such a low constraint on the neutrino mass. While there is still work to be done, it is fascinating that cosmological observations of the history of the Universe can also help us constrain models of particle physics.

Edited by: Lindsey Gordon

Featured image credit: Image adapted from ‘A sky full of galaxies’, CC BY 4.0, https://commons.wikimedia.org/wiki/File:A_Sky_Full_of_Galaxies.jpg via Wikimedia Commons