We hope you’re enjoying our beyond posts, going beyond astronomical research and beyond published papers to talk about issues facing astronomers and astronomy in general.

I’m going to go a step further, and talk about an issue that directly affects not only anyone in astronomy, but also anyone who consumes any form of science media. Heck, it concerns anyone who consumes oxygen.

I’m going to talk about a paper that shows us how intractable our opinions can become, and how taking in more information may not actually free us from our biases.

You may be surprised to see a bite in the first person, but there’s a reason for this. It’s easy to talk about bias as a problem other people suffer from. But I’m sorry to say that I exhibit this bias, and I’m not the only one. I hope to convince you we all do.

I hope it’s enjoyable reading, but I fear it may not be. I found this paper unsettling and uncomfortable, and all the more important for that.

Title: Climate-Science Communication and the Measurement Problem, open access

Authors: Dan M. Kahan

First author’s institution: Yale University – Law School

Status: Published in Advances in Political Psychology (2015)

There’s a simple, comforting assumption that we often make about people of opposite opinions to our own: that they don’t have enough information to come to the correct conclusion (ours).

It’s a fundamental, and laudable, tenet of rationalism that, given more and more information, people will converge on the same conclusion. That when we’re at odds with someone, we could, given time and resources, convince them of our viewpoint by educating them, showing them facts and figures to gradually change their mind.

It’s a utopian ideal, that an educated, critical and literate population would share the same beliefs and values.

But what if that weren’t true?

What if the more we know, the more resolute the lines we draw between our side and theirs?

What if, well, this:

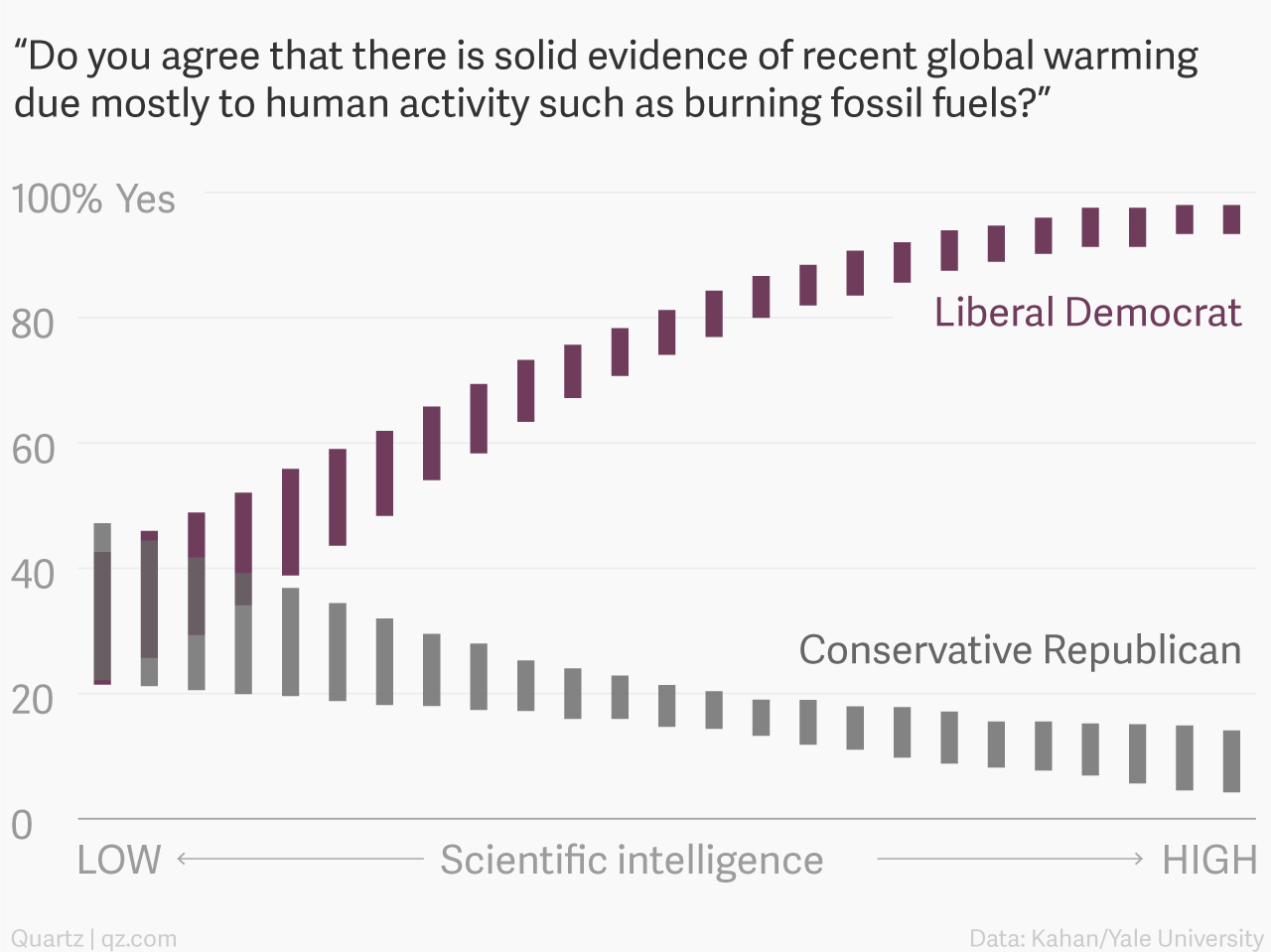

A figure from Kahan’s paper, redrawn beautifully by Quartz for clarity. It shows the fraction of people in left- and right-leaning groups who agree that there is evidence for human-caused climate change. The more science and information the two groups know and have been exposed to, the further their opinions diverge.

Here we see two groups, one that politically leans left, and the other right. As we move from left to right across the plot we go from people with low science literacy (based on a series of 18 general science questions of increasing difficulty) to high. The plot shows the fraction of each group that agrees there is strong evidence of human-caused climate change.

Perhaps it’s not shocking to see liberals tend to believe in human-caused climate change, whilst conservatives do not. But what is shocking is that the higher your science literacy, the more cohesion there is within your group, and the more your group diverges from the other.

The more these respondents knew, the more consistently they believed their side. Those who were fervently denying human-caused climate change were not doing so from a lack of information, but a glut.

I believe in human-caused climate change. Why? Because I’ve seen lots of evidence that I believe confirms my belief. I might have hoped that, shown the same evidence, others would come to the same conclusion.

But what if I had started out in the opposite camp, and learned all I have learned about our effect on our planet? I might now, this plot would suggest, be a well-informed and unwavering climate change denier.

Not all of these results were this worrying. When the question was what scientists believe, rather than what the evidence shows, the two sides converged.

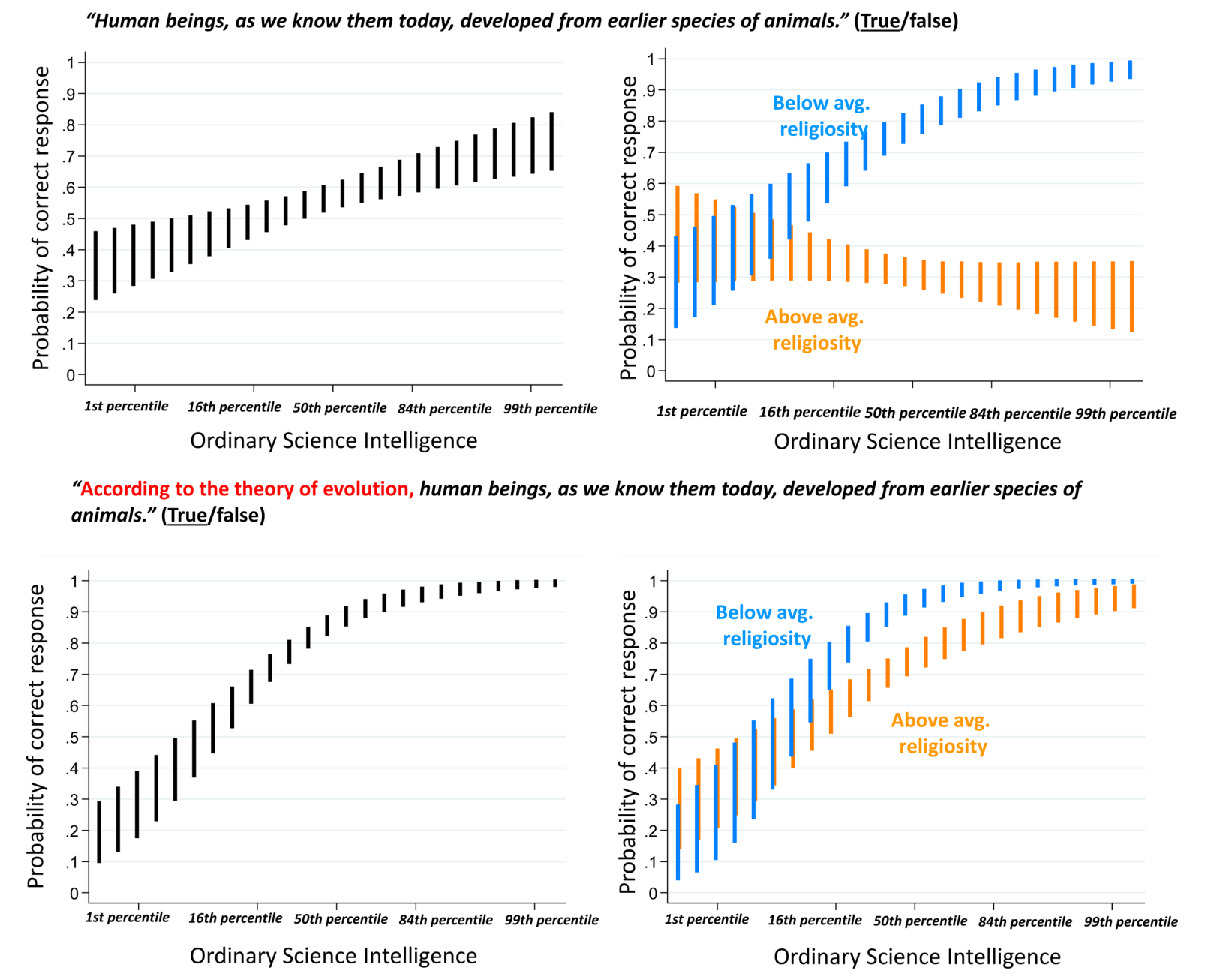

From Kahan’s paper, showing the responses from all respondents (left) and comparing groups with strong religious belief to those with little or none (right). As the two groups become more science literate, they diverge on their own belief in evolution, but roughly agree about what the theory states.

But when the question is directly challenging the subjects’ beliefs, and is mired in as much controversy as climate science, people of any opinion are able to find patterns that support their views. They’re able to naysay and discredit, at least to their own satisfaction, evidence contradictory to their views. They highlight and commit to memory anything that confirms their opinion, and leave what they don’t agree with by the wayside.

And when I say “they,” I suppose I really mean “I.”

I’m doing this as resolutely as anyone. I’d barely entertain the notion I might be wrong about climate change. I selectively read, remember, and believe evidence of our impact on our planet. I don’t even really know what the major arguments against climate change are.

I’m not saying I want a conversion, but right now I’m not even equipped for a conversation.

The science of changing someone’s mind is a complex, fascinating one that perhaps we’ll explore further in the future.

But let this stand alone as a warning: information alone is not always persuasive. It’s tied up in the politics of those disseminating it and the views of those receiving it. Facts and figures without context and empathy are difficult to comprehend and easy to misinterpret.

When we present data on its own, we may see our own narrative within, but others may see theirs. Who’s to say which is more valid? Too much data can be as confusing and abusable as too little.

What information we do share, be it with colleagues, students, or the public, must be accompanied by thoughtful and clear explanation. And in an argument where we do stand on one side, unleashing a sheer barrage of information is not likely to persuade the other.

This is obviously important for anyone writing and speaking about science, but also for anyone reading and listening to it. You may be able to find evidence that supports your conclusions in almost any discussion, regardless of its intent. And you may isolate yourself from evidence that does not fit your views, regardless of its validity.

You may cherry-pick the conclusions you draw, even when you believe you critically seek information from a wide variety of sources.

I might have. I may. And probably, I will again.

There’s little that can be done but to be aware of our own limitations in the arguments we form and present. And to be aware of, and empathetic to, those limitations in others.

There’s more than a little irony in isolating ourselves from the other side of an argument.

Dear Zephyr Penoyre

I enjoyed reading your comments in astrobites. I find the sociological discussions to be meaningless. I would suggest you look at a book by Dr Robert Laughlin called “Powering the Future”. In particular, look at chapter 2, called “Geologic Time”.

Best Regards, Jim

As a scientist, one must form opinions. As a scientist, one must keep an open mind. I value skepticism as a mechanism to keep an open mind. I was skeptical in 1964 when climate scientists were predicting a returning ice age, global temps having declined from 1945 until 1975. Difficult for me to reverse direction easily. Need an explanation for the drop in temps, increasing amounts of ice, etc. BobPM

But this diversity is not a bad thing. If humans acted purely rationally and everyone reached the same conclusion, that would be horrible. It’s like AI ruling the world.