Title: Rediscovering orbital mechanics with machine learning

Authors: Pablo Lemos, Niall Jeffrey, Miles Cranmer, Shirley Ho, and Peter Battaglia

First Author’s Institution: Department of Physics and Astronomy, University of Sussex, Brighton, BN1 9QH, UK

Status: Under review [preprint on arXiv]

The list of topics once assumed to be the exclusive domain of human creativity which later fell to the cold computation of neural networks is long and getting longer. Today’s authors might have just scrawled another onto the quickly filling page: they used machine learning to discover a law of physics.

Now, this was a law we already knew about, and have known for a long time: Newton’s law of universal gravitation. However, even though the law being (re)discovered is covered in most high school physics curricula, this is a thrilling first step toward using machine learning to help formulate new equations to describe our universe. Understanding how they coaxed a computer to play “scientist” and deliver this result is worth a deeper dive, so let’s dig into the paper!

Computer Coaching

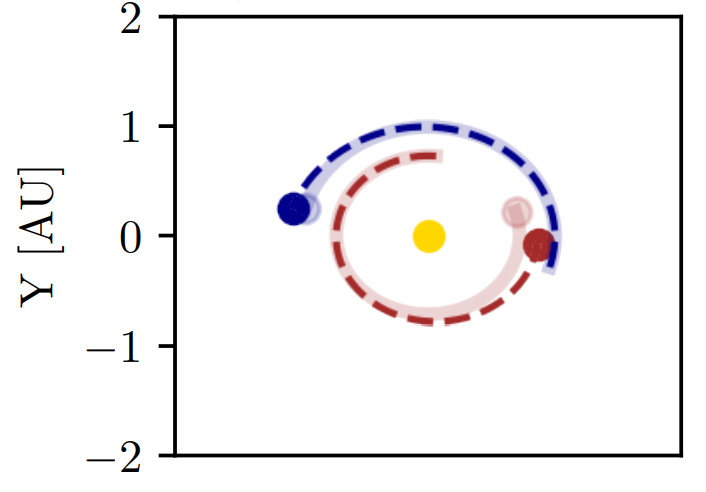

To start, the authors first had to teach a computer how to move the planets. To do this, they gave a program called a graph neural network the locations of every major object in the solar system over thirty years and asked it how they’d move in the next three. The program went off and fiddled with different “weights” dictating how each object affected the others, then made a guess about their trajectories. If it noticed that nudging the weights in one direction made the guesses better, it’d keep moving them that way until its guesses were as close to right as they could get.
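The “nudging” described above is, in essence, gradient descent. Here is a minimal sketch of the idea, assuming a toy problem with a single weight rather than the authors’ graph neural network; the function `train_weight` and the data are hypothetical and chosen only for illustration.

```python
def train_weight(xs, ys, lr=0.01, steps=500):
    """Fit one weight w so that w * x approximates y, by gradient descent."""
    w = 0.0  # initial guess
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        # Nudge the weight in the direction that reduces the error.
        w -= lr * grad
    return w


# Hypothetical data generated from y = 3 * x, so the "right answer" is w = 3.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0 * x for x in xs]
w_fit = train_weight(xs, ys)
```

A real network repeats this same update for thousands of weights at once, but the loop is the same: guess, measure the error, nudge, repeat.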

After a lot of the machine equivalent of hard work and studying, their network “learned” to emulate the motion of the solar system’s components. To be clear, it didn’t “know” anything about gravity, space, or any of the theories proposed over the millennia to explain our changing night sky. Even so, this ignorance did not limit its performance: if you fed this computer the positions and velocities of the planets at a certain time, its fans would whir and its lights would blink and eventually it’d tell you their accelerations thirty minutes after that given time to within 0.2% of their actual values. Everything else in this paper aside, that’s an impressive feat. Although graph neural networks have been trained in the past to replicate simulated astronomical data, this is one of the first times this type of code has managed to ingest information about the real world, then accurately predict what would happen next.

Several Steps Back

It’s worth stepping back and considering this stage of their project, not from an astrophysical or machine learning perspective, but with a more philosophical bent. What is our goal in all of science? It’s surprisingly difficult to answer this question with specificity beyond generic thoughts such as “to learn/understand our universe”, even (and possibly especially) for scientists. You might say something like “to find patterns which allow us to predict the future and explain the past”, and that sounds solid enough that we can run with it here.

But doesn’t this neural network allow us to do just that? Sure, it’s a “black box” made up of thousands of weights and complicated linear algebra, so we can’t exactly write it out on a page or even understand how it comes to its conclusions. But whatever it’s doing, it’s clearly doing it well. In principle we could use this network to predict eclipses and moon phases, meaning it works just as well as scientific theories from 2,000 years ago.

That isn’t very satisfying though. All of our scientific intuition tells us that a “theory” is something which can be written down, a mixture of mathematics which can be complex but is ultimately understandable and ideally elegant. Although the neural network can functionally do many of the things we’d like from a scientific theory, it certainly doesn’t meet this expectation.

Translating from Computer to Human

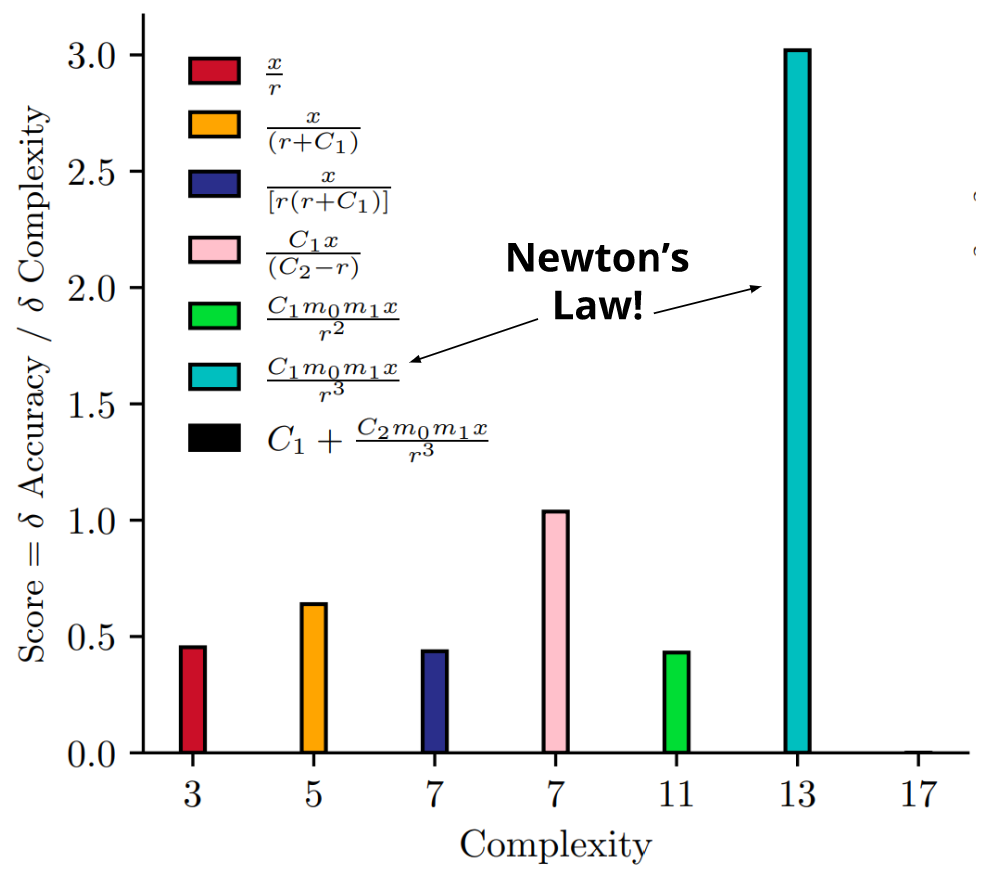

Acknowledging this, the authors move to the second phase of their process, which was to convert the knowledge acquired by their graph neural network into a form more appropriate for human consumption. To do this, they turned to a technique called symbolic regression, also known as automated equation discovery. This mildly intimidating-sounding technique is indeed complicated enough that they leave its explanation to other papers, but luckily the big picture is digestible for non-specialists. Essentially, this new algorithm tries out many different candidate equations, aiming to recreate what the graph network already knows using only simple combinations of mathematical operators. For every guess, it checks how well its equation matches the expected result, then does some self-reflection and assesses how “complex” its guess was. It’s looking for something that’s both accurate and simple: in the end, it made over 100 million guesses, and decided about 7 were worth showing to the authors.
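To make the accuracy-versus-simplicity trade-off concrete, here is a heavily simplified sketch. It assumes a fixed, hand-written list of four candidate equations with hand-assigned complexity values, whereas real symbolic regression searches over a vast space of expressions. The names `fit_constant`, `select_equation`, and `CANDIDATES`, the scoring rule, and the data are all hypothetical and not taken from the paper.

```python
def fit_constant(g_values, ys):
    """Least-squares best constant c for the model y = c * g(r)."""
    num = sum(g * y for g, y in zip(g_values, ys))
    den = sum(g * g for g in g_values)
    return num / den


def select_equation(candidates, rs, ys, penalty):
    """Return the candidate name minimizing error + penalty * complexity."""
    best_name, best_score = None, float("inf")
    for name, g, complexity in candidates:
        g_values = [g(r) for r in rs]
        c = fit_constant(g_values, ys)
        mse = sum((c * gv - y) ** 2 for gv, y in zip(g_values, ys)) / len(ys)
        score = mse + penalty * complexity  # accuracy traded against simplicity
        if score < best_score:
            best_name, best_score = name, score
    return best_name


# (name, shape of the equation, rough hand-assigned complexity)
CANDIDATES = [
    ("c", lambda r: 1.0, 1),
    ("c / r", lambda r: 1.0 / r, 3),
    ("c / r**2", lambda r: 1.0 / r**2, 5),
    ("c / r**3", lambda r: 1.0 / r**3, 5),
]

# Hypothetical "observations" that secretly follow an inverse-square law.
rs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0 / r**2 for r in rs]

print(select_equation(CANDIDATES, rs, ys, penalty=0.01))  # → c / r**2
print(select_equation(CANDIDATES, rs, ys, penalty=1.0))   # → c
```

With a gentle complexity penalty the inverse-square form wins; with a harsh one, the sketch settles for a bare constant, which shows how much the final answer can depend on how “complexity” is priced.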

And what do you know! The guess it was happiest with, the one which best balanced accuracy with simplicity, is exactly Newton’s law of gravitation.
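For reference, here is Newton’s law of gravitation in its standard textbook form. The notation is the usual convention and not necessarily the one used in the paper:

```latex
\vec{F}_{12} = -\,\frac{G\, m_1 m_2}{r^{2}}\,\hat{r}_{12}
```

Here \vec{F}_{12} is the force on body 1 due to body 2, G is the gravitational constant, m_1 and m_2 are the two masses, r is the distance between them, and \hat{r}_{12} is the unit vector pointing from body 2 to body 1. The minus sign makes the force attractive.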

With this equation in hand, the authors went the extra mile and plugged it back into their graph neural net so that it could estimate the masses of everything in the solar system. It did a phenomenal job, correctly weighing the planets to within 1.6%. With the correct masses and underlying law, their network could now predict the motions of the planets well into the future. Their program had not only “learned” physics, it then applied it to build an accurate model of our solar system.
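As a rough illustration of why knowing the force law lets you “weigh” a body from motion alone, the sketch below inverts a = GM/r² for the Sun using Earth’s orbit. It is not the paper’s fitting procedure, which is carried out inside the network; it assumes a circular orbit and rounded textbook constants, and the function name `central_mass` is hypothetical.

```python
import math

G = 6.674e-11          # gravitational constant, m^3 kg^-1 s^-2 (rounded)
AU = 1.496e11          # mean Earth-Sun distance, m (rounded)
YEAR = 365.25 * 86400  # Earth's orbital period, s (approximate)


def central_mass(radius, period):
    """Mass of the central body implied by a circular orbit."""
    # Centripetal acceleration of a body on a circular orbit.
    accel = 4 * math.pi**2 * radius / period**2
    # Invert the inverse-square law a = G * M / r^2 for M.
    return accel * radius**2 / G


sun_mass = central_mass(AU, YEAR)
print(f"{sun_mass:.3e} kg")
```

Even this crude estimate lands within about a percent of the accepted solar mass of roughly 1.989e30 kg.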

What now?

This article contains all the ingredients for sensationalist but hollow hot takes: “AI can replace scientists” or “machines learn physics” are both somewhat correct here, but boiling away the nuance both hides the limitations and cheapens the excitement behind these methods. Beginning with the limitations, the authors candidly admit several. For one, although this program “learned” some new physics, it had to be “taught” some important other laws to get there: the authors had to hard-code in Newton’s second and third laws, as well as rotational equivariance. For another, the final equation it spits out is the product of balancing accuracy with a somewhat arbitrary metric of “complexity”. If someone wanted a super simple formula, for example, they could tweak that metric and cause the algorithm to consider, then dismiss, Newton’s law.
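To see what “hard-coding” Newton’s second and third laws can look like in practice, here is a small sketch. It assumes toy units with G = 1 and uses Newtonian gravity as a stand-in for the learned pairwise interaction; the names `pair_force` and `accelerations` are hypothetical and do not reflect the authors’ actual architecture.

```python
import math

G = 1.0  # toy units, chosen only to keep the numbers simple


def pair_force(m_i, m_j, r_i, r_j):
    """Force on body i due to body j (stand-in for a learned interaction)."""
    d = [b - a for a, b in zip(r_i, r_j)]  # vector from i to j
    dist = math.sqrt(sum(c * c for c in d))
    scale = G * m_i * m_j / dist**3
    # A scalar function of distance times the separation vector:
    # rotating the system simply rotates the force along with it.
    return [scale * c for c in d]


def accelerations(masses, positions):
    n = len(masses)
    forces = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            f = pair_force(masses[i], masses[j], positions[i], positions[j])
            for k in range(3):
                forces[i][k] += f[k]  # force on i from j
                forces[j][k] -= f[k]  # third law: equal and opposite on j
    # Second law: a = F / m for every body.
    return [[f_k / m for f_k in f] for f, m in zip(forces, masses)]


masses = [5.0, 1.0, 0.5]
positions = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 2.0, 1.0]]
accs = accelerations(masses, positions)

# Because of the third law, the net force on the whole system vanishes.
net = [sum(m * a[k] for m, a in zip(masses, accs)) for k in range(3)]
```

With this structure fixed in advance, the only thing left to learn is the pairwise interaction itself, which is the piece symbolic regression then tries to express as an equation.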

As for excitement though… These authors pulled off something of a magic trick. They trained a computer to rediscover one of the greatest scientific achievements of classical physics, and they did it with algorithms which in theory can be applied to some of the greatest outstanding questions in modern physics. We’re probably never going to give a computer some telescope images and get in return a simple equation describing dark matter, but it’s very possible that someday this technique will help us reach that goal.

The authors summarize it well themselves: “Ultimately we believe our approach should be viewed as a tool which can help scientists make parts of their discovery process more efficient and systematic, rather than as a replacement for the rich domain knowledge, scientific methodology, and intuition which are essential to scientific discovery.” This is a promising new tool, and I’m excited to see how it’ll be modified and applied in the future.

Astrobite edited by Katy Proctor

Featured image credit: Figure 1 in the paper