- Title: The Nature of Scientific Proof in the Age of Simulations

- Author: Kevin Heng

- Author’s institution: University of Bern, Switzerland

- Status: Published in American Scientist

In this short critical essay, computational astrophysicist Kevin Heng questions his field’s movement toward ever more complex models that produce ever larger volumes of data. Toward the end of the essay, Heng poses some open questions to the simulation community: “Is scientific truth more robustly represented by the simplest, or the most complex model?” and “How may we judge when a simulation has successfully approximated reality in some way?” To understand these questions, let’s go back to the beginning of his essay for context.

How Science Works

One framework for discussing how science works (that is, how it teaches us about nature) is to think about how it is practiced. Heng points out that science has always been carried along by two streams of practice: theory and experiment. The theoretical stream emphasizes models: a model (maybe expressed as an equation) predicts something, and it may be proved false if that something doesn’t happen. The experimental stream emphasizes observations. For an observation to be useful to science, it must be reproducible.

Simulation as a Tool

A sort of marriage of these two streams appeared last century, as scientists began to use computers as tools to expose big-picture predictions of very complex models. Today, these scientists simulate Nature by choosing a set of governing equations and computing the future of some set of initial conditions. The product of one of these simulations may be a large data set, like that from the recently highlighted Illustris Project, or its predecessor, the Millennium Simulation Project. Scientists may then ‘mine’ the data set for patterns, just as astronomers mine real observational data sets, such as the Sloan Digital Sky Survey. To do science with simulations of this scale, specialists have arisen: some to engineer the code, some to design numerical experiments, and some to process and interpret the output.
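To make "choosing a set of governing equations and computing the future of some set of initial conditions" concrete, here is a minimal sketch in Python. It is not taken from Heng's essay or from any of the projects named above. It assumes the simplest possible setup: a test particle orbiting a point mass under Newtonian gravity, in units where GM = 1, advanced with a leapfrog (kick-drift-kick) scheme. The function names `accel` and `simulate_orbit` are made up for this illustration.

```python
import math


def accel(x, y, gm=1.0):
    """Governing equation: Newtonian gravity from a point mass at the origin."""
    r3 = (x * x + y * y) ** 1.5
    return -gm * x / r3, -gm * y / r3


def simulate_orbit(x, y, vx, vy, dt, n_steps):
    """Advance the initial conditions with a leapfrog (kick-drift-kick) scheme."""
    ax, ay = accel(x, y)
    for _ in range(n_steps):
        # Half kick: update velocity using the current acceleration.
        vx += 0.5 * dt * ax
        vy += 0.5 * dt * ay
        # Drift: update position using the half-step velocity.
        x += dt * vx
        y += dt * vy
        # Second half kick with the acceleration at the new position.
        ax, ay = accel(x, y)
        vx += 0.5 * dt * ax
        vy += 0.5 * dt * ay
    return x, y, vx, vy


# Initial conditions: a circular orbit of radius 1 (speed 1 when GM = 1).
n_steps = 1000
dt = 2.0 * math.pi / n_steps  # one orbital period split into n_steps
x, y, vx, vy = simulate_orbit(1.0, 0.0, 0.0, 1.0, dt, n_steps)
energy = 0.5 * (vx * vx + vy * vy) - 1.0 / math.hypot(x, y)
print(x, y, energy)
```

After one full period the particle should come back close to where it started, with its energy close to the initial value of -0.5. Real cosmological codes differ from this toy in almost every respect, but the pattern of equations plus initial conditions plus a stepping loop is the same.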

It’s obvious that this new practice is a powerful tool. In astrophysics, simulations are especially useful, because the heavens aren’t very manipulable. On a computer, we can rearrange stars, and dial time forward and back. Direct computation also gives us insight into models that are opaque to analytical thought. We just don’t know the implications of very complex models until we simulate specific scenarios on a computer.

Weaknesses of Simulations

But Heng points out some limitations of this tool as well; simulations have some fundamental weaknesses.

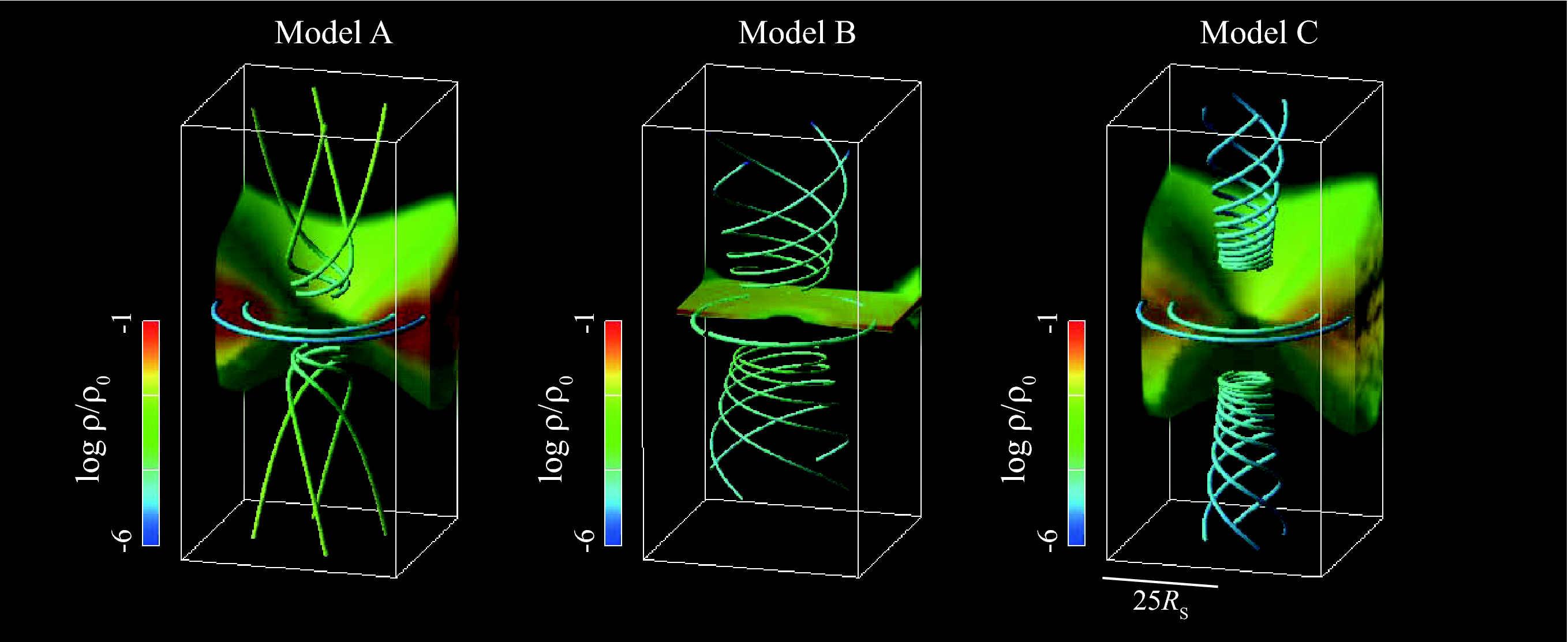

One weakness is resolution. In computer simulations, continua (like time and space) are approximated by discrete ranges. Time steps may be small, and spatial resolution may be high, but there will always be phenomena that occur on even smaller scales, termed sub-grid physics. When simulating an accretion disk rotating around a black hole, for instance, the tension of magnetic fields drives turbulence on such small scales that many simulations cannot resolve it. And this sub-grid turbulence may play a crucial role in the overall lifetime and energy output of the disk. To describe sub-grid physics, phenomenological models are often used. For instance, in the case of magnetic turbulence, simulations often include an ad hoc α-parameter describing the bulk viscosity of the disk.
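The resolution problem can be seen in a toy example. The sketch below is my own illustration, not something from Heng's essay. It samples two sine waves on a hypothetical periodic grid of 8 points: one with a single wavelength across the domain, and one with 9 wavelengths, which is finer than the grid spacing can capture. The helper name `sample_mode` is made up for this example.

```python
import math


def sample_mode(k, n_grid):
    """Sample sin(2*pi*k*x) at the n_grid evenly spaced points x = j / n_grid."""
    return [math.sin(2.0 * math.pi * k * j / n_grid) for j in range(n_grid)]


n_grid = 8
coarse = sample_mode(1, n_grid)  # one wavelength across the domain
fine = sample_mode(9, n_grid)    # nine wavelengths: structure below the grid scale

# On this grid the two modes take the same values at every point.
largest_difference = max(abs(a - b) for a, b in zip(coarse, fine))
print(largest_difference)
```

The two sets of samples agree to within floating-point rounding, so nothing stored on this grid can distinguish the small-scale wave from the large-scale one. Whatever the fine structure does has to be put in by hand, which is the role sub-grid prescriptions play.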

Another weakness is sheer complexity. Simulations seeking to mimic nature trend toward increasing complexity. A climate model with clouds is more faithful to Earth’s atmosphere than a model without. But as simulations get more complex, and their numerical methods become more nuanced, they have a tendency to become black boxes: astrophysicists may find it easy to put in initial data and get out a future state, without understanding enough of the model or the method to discriminate between a real effect and a spurious artifact.

Wielding Simulations Well

Heng emphasizes two essential ingredients in useful astrophysical simulations: reproducibility and falsifiability. Good simulations are published with enough information to make them readily reproducible. The OpenScience project, encouraging computational scientists to make their code publicly available, is one example of a movement in this direction. And good simulations are based on models that can be proven wrong. Some models have so many free parameters (like the α-viscosity parameter for accretion disks) that they will always be able to reproduce results seen in Nature, which means that agreement with observations tells us little about whether they are right. Heng quotes the noted computational mathematician and physicist John von Neumann: “With four parameters, I can fit an elephant and with five I can make him wiggle his trunk.”
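Von Neumann's point about free parameters can be demonstrated in a few lines. The sketch below is my own illustration, not Heng's. It assumes a polynomial "model" with as many free coefficients as there are data points, built by Lagrange interpolation, and applies it to two made-up data sets that have nothing to do with each other. The function name `lagrange_fit` and all the numbers are invented for this example.

```python
def lagrange_fit(xs, ys):
    """Return the polynomial with len(xs) coefficients that passes through every point."""
    def model(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return model


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
smooth_data = [0.0, 1.0, 4.0, 9.0, 16.0]   # made-up "observations"
erratic_data = [3.0, -7.0, 2.5, 11.0, -4.0]  # unrelated made-up "observations"

for ys in (smooth_data, erratic_data):
    model = lagrange_fit(xs, ys)
    worst_residual = max(abs(model(x) - y) for x, y in zip(xs, ys))
    print(worst_residual)
```

Both data sets are matched essentially perfectly. A model that can fit anything you hand it cannot be caught out by the data, so a good fit alone is no evidence that the model is right.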

I’m a computational astrophysicist, like Heng, and I find his critiques both challenging and exciting.
