“One of the problems we face in the United States is that unfortunately, there is a combination of an anti-science bias that people are, for reasons that sometimes are, you know, inconceivable and not understandable, they just don’t believe science and they don’t believe authority… And that’s unfortunate because, you know, science is truth. And if you go by the evidence and by the data, you’re speaking the truth.”

-Dr. Anthony Fauci speaking on the Learning Curve podcast

Here at Astrobites, our goal is to communicate science with astronomy enthusiasts, whether they are pursuing astronomy as a career or not. In an era of easily accessible false information and growing communities of conspiracy theorists, finding credible science can be difficult and communicating scientific findings accurately becomes increasingly vital.

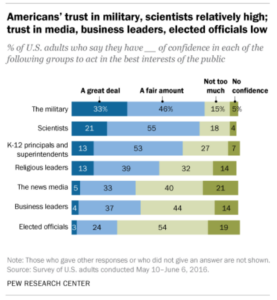

An important aspect of science communication is cultivating public trust. A 2017 Pew Research Center study found that scientists are trusted more than K-12 principals, religious leaders, the media, business leaders, and elected officials (see Figure 1). Yet it seems like we hear people denying scientific findings around every corner. The goal of this Beyond Astrobite is to examine why people don’t trust science and what to do about it.

Figure 1. Results from a 2017 Pew Research Study that explored trust levels in various professions. Surprisingly, scientists rank relatively high compared to a lot of professions.

Single Studies Get Sensationalized

News outlets and other sources often cite a single study to back up a claim (one well-known example is the since-retracted Wakefield study that claimed a link between the MMR vaccine and autism). Sensationalizing single studies is detrimental to trust in science. If a study is retracted, or if later research challenges its conclusions, the public is left confused about whether the findings were real. People hear one thing at one time and a different answer at another, and they stick with the result that makes them most comfortable. Recently, this has even happened with the Centers for Disease Control and Prevention (CDC) in the U.S., when it changed its official recommendation to suggest that all Americans wear masks during the pandemic. The important thing here is not what was said initially or in a single study, but what the scientific consensus says about mask-wearing. Scientific consensus on a topic means that a majority of scientists agree after rigorously checking each other’s work, and that is the kind of science that deserves our trust.

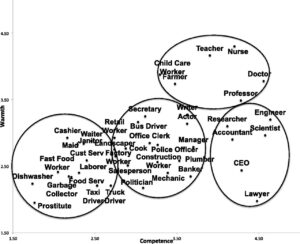

Scientists Are Smart, But Not Warm

Scientists may rank towards the top of trustworthiness in Figure 1, but there are other factors that may harm how people receive their research. A 2014 study suggests that for a communicator of any kind to be considered credible, they have to express both expertise and what the authors call “warmth.” The study surveyed Americans about certain jobs and found that scientists fall into the category of “envied” jobs—competent but cold, respected but not trusted (Figure 2). Without half of the necessary ingredients for credibility, it is difficult for scientists to gain public trust.

Figure 2. Warmth (otherwise defined as trustworthiness or friendliness) versus competence (capability) for various jobs rated on a 1-5 scale from a 2014 study of Americans.

No One Wants to be Wrong

Some of the distrust in science may stem from the media or scientists themselves, but much of it comes from the individual. Biases play a role in how everyone perceives information. A 2017 study found that many people rely on their intuition and seek consistency between evidence and their own beliefs, leading to the misinterpretation of information. A separate study from earlier this year found that people are more likely to reread information if it disagrees with their beliefs and are more likely to misremember numbers (for example, the number of Mexican immigrants to the U.S.) if they contradict their beliefs. There’s even something that’s called the backfire effect: sometimes when people see evidence that contradicts their opinions, they become even more set in their ways.

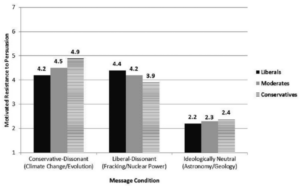

Personal bias plays a huge role in interpreting and trusting science, and according to a 2015 study, it does so for both sides of the political spectrum. Whether you’re conservative or liberal, you are more likely to believe science that backs up your already held beliefs. Really, it just depends on the issue. Figure 3 clearly demonstrates this, as conservatives are more resistant to persuasion about climate change and evolution and liberals are more resistant to persuasion about fracking and nuclear power.

Figure 3. Resistance to persuasion about different topics for liberals, moderates, and conservatives, from a 2015 study. The study asked 1500 participants to rate the objectivity of a science article on a 1-7 scale. The higher the number here, the more resistant the group was to the information in the science article. The leftmost cluster represents topics that tend to conflict with conservative views (climate change and evolution), the center cluster represents topics that tend to conflict with liberal views (fracking and nuclear power), and the rightmost cluster represents generally neutral sciences like astronomy (woo!) and geology.

Education also doesn’t protect you from misinterpreting the facts. A 2014 study found that the higher someone’s science literacy, the more consistently their beliefs lined up with their side of the political spectrum. A 2008 study on how people view nanotechnology found that the more information people were exposed to, the more polarized their opinions on the technology became. Along the same lines, a 2013 study found that the higher someone’s numeracy, or ability to understand and work with numbers, the more polarized their views became. Believe it or not, higher levels of education and knowledge do not translate to unbiased beliefs.

How to Help

So how can we, as scientists and science enthusiasts, actually get past all the biases and misinformation to get our point across? Although this is a hard problem with no single solution, one way to help is to change the way we communicate.

There is evidence that the language people use and the way they support their claims may make an argument more likely to be heard. A 2016 study looked at the ChangeMyView subreddit to see what aspects make an argument more effective at changing someone’s mind. The authors found that including links to evidence (huh, weird, did I do that?) makes an argument more likely to succeed, while too intense a counterargument will fail. The study found that overall, even on a forum specifically designed to alter opinions, only 30% of original posters changed their minds. But hey, that’s better than nothing!

In a TEDx talk, science communicator Emily Calandrelli makes one point very clear: scientists need to be nicer. When we insult someone, their ability to consider our argument basically disappears. Calandrelli argues that we need to consider our audience, determine what they actually care about, and frame arguments around that. Empathetic approaches that really delve into the feelings behind an opinion will be way more effective than dismissing someone’s opinions as uneducated or outright wrong. Remember that everyone comes into a conversation with their own life experiences and their own beliefs. Communicating science is important, but how we communicate it is even more important!

Distrust in science stems from multiple sources: the media, scientists themselves, and mostly from inside our own brains. Recognizing where the distrust comes from can help us address our biases and the biases of people around us in an empathetic and effective way.

We would like to acknowledge that we are summarizing research outside of our field. While we are trained astronomers and physicists, and practiced writers of paper summaries, we are not experts in social science research. We have done our best to capture the findings of this literature accurately and respectfully, but do defer to the original papers and to the authors of the studies. “Beyond astro-ph” articles are not necessarily intended to be representative of the views of the entire Astrobites collaboration, nor do they represent the views of the AAS or all astronomers. While AAS supports Astrobites, Astrobites is editorially independent and content that appears on Astrobites is not reviewed or approved by the AAS.

Truth is philosophically difficult to establish. Perhaps, like the IPCC reports, we can discuss the probability that an idea is more or less likely to be correct, as an approximation to ‘truth’.

I find fig. 3 very confusing. It seems that libs and cons are both highly resistant, and about equally so, towards “controversial” topics, regardless of whether they liked the article’s message or not. The biggest difference was between “controversial” and non-controversial topics. It would seem that everyone is highly resistant to “controversial” facts, even if they agree with them. So what gives?

This reminded me of my high-school lessons in rhetoric, the ancient art of persuasion. Aristotle divided it into three broad branches: logos, pathos, and ethos. Logos is the rational appeal to reason; as budding scientists, this is the ideal we are taught to strive for, that all arguments should be about the facts, nothing more, nothing less. Between specialists this isn’t a bad stance to take. For communicating with a broader audience, however, I don’t think the other two branches should be discounted. Pathos is, broadly speaking, the emotional appeal, and while I do think, as a scientist, that there’s a danger in taking this too far, perhaps injecting a little emotion into communicating findings with the public could help with that perceived “warmth” issue. (A little humor goes a long way, in my experience!) And finally, the third branch is ethos, which is essentially an appeal to the audience based on faith in the speaker’s character: in a word, trust. I don’t have a magic bullet for increasing people’s trust in scientists, unfortunately, but I think being visible in your broader community, outside of the narrow scientific community, and getting to know more people who aren’t fellow scientists (and serving as a positive example of a “scientist”) would probably help.