It only takes a quick peek at the TOP500 – the ever-updating list of the world’s top (public) supercomputers – to make you feel pretty good about our accomplishments as a species. As of this writing the current world leader is Tianhe-2, a Chinese supercomputer posting a demonstrated performance of 33 PFlops, followed by Oak Ridge’s Titan (17), Livermore’s Sequoia (17), RIKEN’s K Computer (10), and Argonne’s Mira (8). Those numbers are measured in petaflops, or 1015 floating point operations per second. Yet computational physicists are already looking to the next milestone on the horizon: exascale computing, or supercomputers whose performance peaks in the exaflop (1018) range. Take a moment to appreciate that. 1018 floating point operations every single second. Exascale computing has the potential to open whole new frontiers in simulation codes, and astrophysicists will be right there on opening day to model everything from galaxy evolution to the birth of a neutron star. But the leap to exascale won’t be the same as the jump from tera to peta. The truth is, we need to get a lot better at parallelization before we can successfully compute at the exascale level.

Go back to those speeds I listed at the beginning of this article. Confession time: I lied to you a little bit there. TOP500 clocks supercomputers by measuring their performance on the LINPACK benchmarks. But even LINPACK doesn’t claim to reach the maximum theoretical speed of a machine, and most practical codes fall far, far short of these numbers. Don’t drop your n-body simulation code on Titan and expect it to run at 17 PFlops, or anywhere near that. Why don’t programs make full use of the machine? Because a code would have to be perfectly parallelized to do so – that is, every single processor fully stocked with instructions and data on every clock cycle. The reality falls far short of this ideal.

In many cases, it should. Good parallelization requires that the problem be divisible into largely independent subunits, and some problems are simply not amenable to it. Even when they are, the best way to do so may not always be the most obvious. It’s a complex and subtle art that owes as much to the way you phrase the problem as it does to actual programming. If the different portions of the problem need to talk to each other, as in the case of cells in hydro codes that need to know the values of their neighbors, or if global operations have to be done, parallel performance can go right out the window.

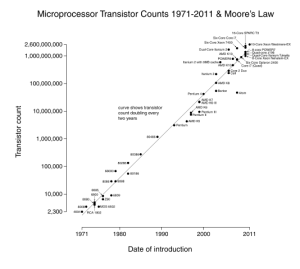

So parallelism is a critical component of good performance on supercomputers. But this has always been true. Why is it any more important now? Well, there’s a common misconception that Moore’s Law dictates processor speeds. It actually states that the number of transistors that can be packed into a chip will double every two years. For a long time this resulted directly in increased chip speeds. But if you’ve built your own machine in the last decade you may have noticed that individual core speeds have stalled out in the 2.5 – 3.0 GHz range. Increases in (apparent) processing power have instead come from chipmakers packing more and more cores onto each chip. The average desktop processor now has at least 2, generally 4, sometimes 8 cores. However this means that, for high performance computing (HPC) purposes, reaping the benefits of increased chip power now requires parallelization. Back in the day, when a code needed more horsepower to be useful, Moore’s Law allowed us to simply sit back for a few years until processing speeds had increased. Even if your program didn’t parallelize all that well, the individual serial processors would still get faster. Now that’s no longer true. So as commercial performance gains become more and more driven by increased parallelism, simulation codes must take advantage of it in order to see their own performance increase.

The problem of hardware parallelism leads directly into the second major challenge of exascale computing: I/O. The biggest bottleneck strangling modern simulation codes is not actual calculation but I/O operations, particularly communication between processors and memory. Laborious optimization can squeeze every last nanosecond from the core, but as soon as a load from memory is required everyone involved might as well go get a sandwich. The slow speed of fetching data from memory is a harsh damper on even the most spirited of codes, and it does not look to be improving anytime soon. Cores have long ameliorated these effects with the use of caching, but this poses its own set of challenges, with implications for both hardware and software design.

Communication overhead does not stop at the core level, either. Anyone who has used MPI, the message passing protocol traditionally used to communicate between the nodes of a supercomputer, can tell you that at large numbers of processors (>10,000) the time the code spends talking amongst itself begins to match or even exceed the time it spends actually computing. At that level the speed and topology of the interconnect between nodes is as much a factor in its performance as the power of the actual nodes themselves. The less a problem is parallelized – the more the individual subunits need to talk to each other – the more time it will have to spend syncing memory and moving data.

What does all this mean? Fundamentally, at the moment we still have trouble programming petaflop computers properly. We can get some good results and they’re certainly more powerful than their predecessors, but we’re far from making maximal use of the machines. On a certain level, we never will – as I mentioned, some problems just don’t parallelize. But I don’t believe we’ve gotten nearly the performance we can out of our existing codes, either. And with an exascale machine, these issues will only amplify. We can build a machine that will hit the exaflop mark on LINPACK or some other suitable benchmark, but true exascale computing will require taking parallelism to the next level. It might even require rethinking our whole approach to designing simulation codes.

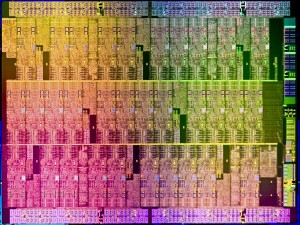

The Aubrey Island die for Intel’s new MIC (Many Integrated Core) architecture. MIC, which uses many (32-64) simplified execution units, is a compromise between fully-featured cores and the minimal streaming units of GPUs. (Image credit: Intel.com)

The interest in GPU computing has spurred some attempts to rethink design processes, and libraries built to hide the complexity of farming out GPU-friendly calculations promise good performance gains for minimal restructuring. Intermediate architectures like Intel’s MIC promise better parallelism without the steep learning curve of GPUs. But it may be the case that numerical methods and even the fundamental design decisions of simulations will need to be revamped. For instance, consider a convolution integral. It might seem fastest to just solve the integral with any one of the many numerical integration techniques available. However, if very fast parallel computing resources are available, it may be more efficient to fast-Fourier-transform the equation, perform a multiplication in Fourier space, and FFT back to the time domain. At the moment, simulation codes are based around the same fundamental abstractions physicists use to think about problems. But perhaps those abstractions are not the way the computer should be thinking about the problem.

Better parallelism will likely be aided by software tools that can detect opportunities for it. A good compiler already does a fantastic amount of legwork in rearranging instructions, caching values, and vectorizing repetitive operations for optimal execution. Perhaps software tools will soon be ready to move up another level and aid in architecting the code itself.

This is all ignoring the most immediate problem of exascale computing: building an exascale computer. The electricity usage alone would effectively require the machine to have its own dedicated power plant, possibly nuclear. Petaflop machines are already plagued by the continual failure of components; even if the mean time between failures is quite long, the sheer number of moving parts in a machine of that size means components fail more or less continually. Codes must be “soft error tolerant,” meaning they can recover from momentary data corruption caused by transients in power or performance. And I’m certain there are dozens of challenges we won’t find out about until we actually begin to build it.

Let this not be read as pessimistic. Computers are certainly not going anywhere, and neither is physics. We ought to build an exascale computer. We will build one eventually, and then we will build several, and then we will move on to the zettascale and the yottascale and so forth, assuming we have not all ascended into some sort of immortal datasphere before then. But the problem is no longer as simple as sticking more nodes in a room, or even packing more cores on a die. Better parallelization of codes, faster memory and better cache management, and novel hardware architectures will all be part of reaching that goal. And when the exaflop machine arrives, we should ensure that our codes are ready to make proper use of it.